Everyone at some point has encountered an argument using an appeal to nature: on a grocery store label proclaiming a product is natural and therefore good for you, in a philosophical argument about where we draw our moral ideas from, in a pop-science book or work of fiction extolling the way Native Americans lived naturally and sustainably, and in countless other contexts. Essentially, an appeal to nature is the claim that if something is naturally occurring, it follows that it is also good, justified, or healthy. While this is sometimes a helpful heuristic (as a rule of thumb, it is generally healthier to eat lettuce than Doritos), just because something is natural doesn’t mean it’s good. In this post I’ll explore some domains in which the appeal to nature fallacy often crops up, and whether it has any validity in each case. (Note: there is a distinct logical fallacy known as the naturalistic fallacy, which concerns a rather arcane philosophical argument about definitions.)

Why is the fallacy so common and widespread? Part of the answer is psychological: there is truth to the claim that since humans evolved in the natural landscape, our mental health suffers when we don’t have access to enough greenery. In a postindustrialized society where nature no longer poses a threat to our safety, being able to experience nature through hiking, hunting, or one of a multitude of other nature-related hobbies has instead become a luxury. Since these interactions are now rare treats, it is little wonder that nature is now considered special and good, rather than the nuisance or danger that preindustrial, postagricultural societies saw in it. (General Philip Sheridan reportedly said in the 1870s, “Let them kill, skin, and sell until the buffalo are exterminated. Then your prairies can be covered with speckled cattle and the festive cowboy.” Back then, nature was something to be conquered, not revered.)

Appeals to nature have frequently been used to argue both sides of many historically important moral issues. Homosexuality, interracial marriage, new technologies and drugs, industrialization, and city living, to name a few examples, have all been claimed to be unnatural and therefore bad. Yet although many of these historical complaints have since been either resolved and incorporated into our mainstream moral code or simply forgotten, the appeal to nature fallacy underlying them has never been addressed. So it’s no surprise that people interested in modern issues continue to use the same fallacy to argue their points.

Romanticizing the Hunter-Gatherer Lifestyle

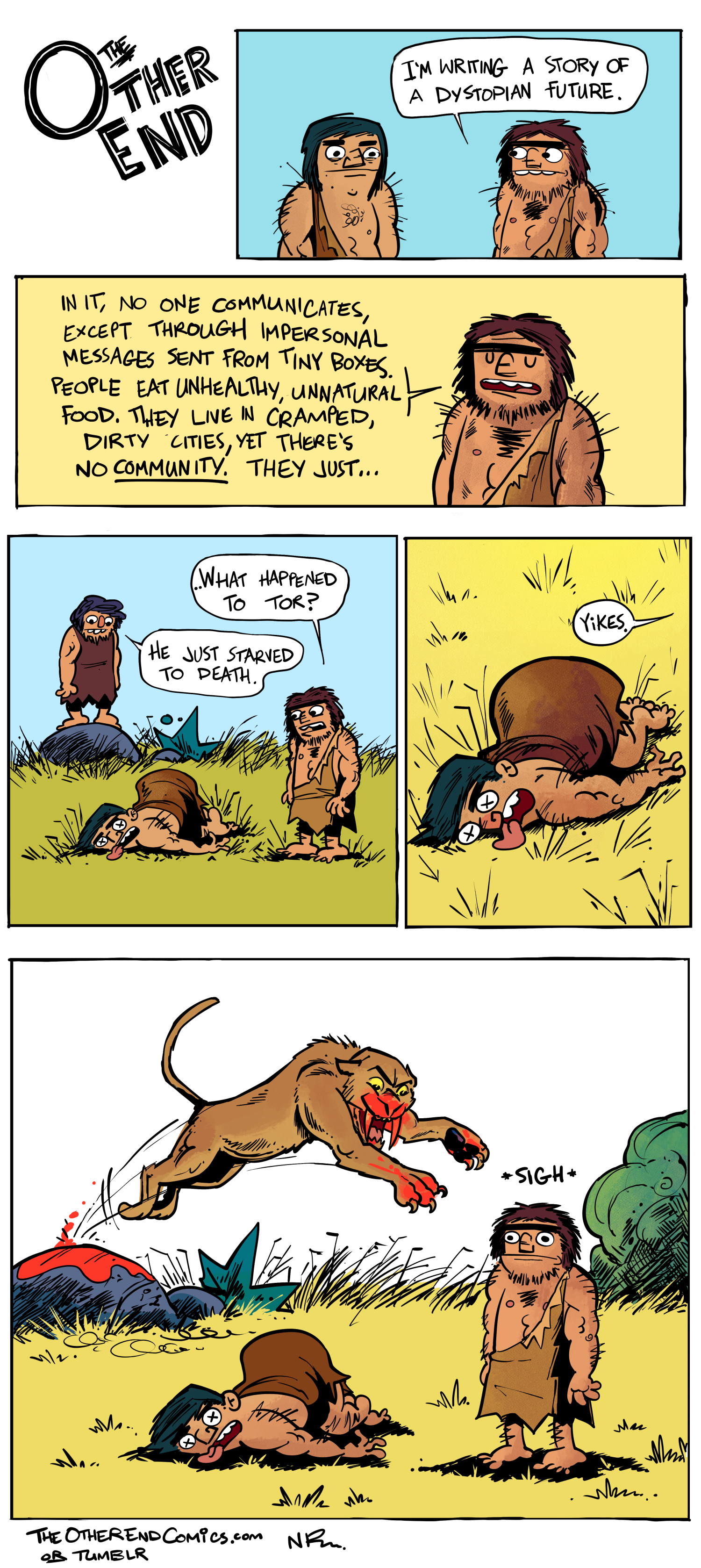

One point that is frequently made in fiction and popular science, and is very prevalent in the American psyche (I can’t speak for other countries), is the idea that preagricultural humans were healthier, happier, and morally superior to their more technologically advanced counterparts. It’s common wisdom that pre-colonial Native Americans respected the animals and ecology they depended on, took pains to use all parts of the animals and plants they hunted and foraged, and never took more than they could use. It’s also a frequently cited figure that modern hunter-gatherers work only 3-5 hours per day to forage enough to sustain themselves and their families, and that in the ample free time afforded by this lifestyle they partake in more fun and social activities, and are therefore happier. Humans have evolved naturally to live as hunter-gatherers, it is argued, so any deviation from this lifestyle makes us worse off.

There are two main categories of argument against this view: that the hunter-gatherer lifestyle is actually worse in many ways than the postindustrial one, and that even hunter-gatherer humans did not live sustainably, and frequently drove local animal species to extinction.

The first, and numerically clearest, point to mention is that child mortality, mortality during pregnancy and childbirth, and mortality and morbidity due to disease, injury, and malnutrition were much, much higher among our forager ancestors than today. These problems were also experienced to varying degrees by early agricultural and industrial peoples, but that doesn’t mean they weren’t facts of hunter-gatherer life as well. According to some research, around 40% of preagricultural humans would have died before the age of 15.

Another point worth mentioning is that foragers did not have the plethora of lifestyle choices that we enjoy today. There was essentially only one “career path” available to our ancestors, and since there were almost certainly individuals who would’ve been uninterested in hunting and gathering 3-5 hours each day, the average happiness and fulfilment attained by hunter-gatherer people was probably lower than that of members of a society that allows one to choose their own path in life. Today we also enjoy the resources and conveniences offered by technology and society, which give us the opportunity to become educated and be entertained in ways far beyond our ancestors’ wildest dreams.

Though preagricultural and postindustrialized humans differ drastically in terms of life expectancy and quality, they are similar in at least one respect: humans have always readily altered the environment they lived in, frequently driving certain animal and plant species to extinction. While it’s difficult to disentangle the effects of humans from the effects of a warming climate at the end of the Ice Age, it is a compelling trend that wherever Paleolithic humans arrived, megafaunal animals disappeared soon after. In places like the Caribbean, where the first humans arrived significantly after the Ice Age ended, the direct effects of human activity, including hunting, domestication, introduction of invasive species, and habitat destruction, can be unambiguously blamed for the severe extinctions that followed.

Furthermore, hunting and gathering on wild land can support vastly fewer people than growing corn shoulder-to-shoulder, and at present there just isn’t enough wild land left to support the world’s huge human population. So, even if we decided that the hunter-gatherer lifestyle was morally, qualitatively, and ecologically superior to the postindustrialized one, the option to return to the lifestyle of our ancestors en masse just doesn’t exist.

Weed People

I’m not very familiar with this community and their assertions about the near-magical properties of marijuana, but apparently there exists a relatively prominent group of diehard potheads who insist that weed is the answer to everything: cancer, seizures, obesity, Alzheimer’s, you name it. They even claim that it increases lung capacity, since the deep inhalation and breath-holding employed to extract the most drug from the smoke constitute “lung exercise”. These beliefs stem mostly from motivated reasoning, a cognitive bias rather than a logical fallacy: these people have already decided that they enjoy weed, and then work backward to find reasons that it’s the correct and healthy thing to do. But the appeal to nature fallacy is also thrown around. Weed is healthier and more effective than pharmaceuticals produced in a lab because it’s just a plant, it’s natural, and our brains have coevolved circuitry to exploit it, they claim. However, many of their claims are clearly dubious at best, and though the long-term effects of habitual marijuana use aren’t well understood, it has been linked to paranoia, delusions, and even schizophrenia.

Death, Transhumanism, and Cryonics

One surefire way to garner strange looks in a conversation is to casually and matter-of-factly mention that you plan to have your head frozen when, or even before, you die. The rationality community has been struggling to convince outsiders of the reasonableness of this plan for a long time, but it’s been a tough battle, mostly because of the appeal to nature fallacy (their other stumbling block lies in the fact that cryonic methods are still underdeveloped and unreliable). The appeal to nature argument goes as follows: since death and aging are natural parts of the way the world operates, they must be accepted, and even embraced, as part of the circle of life. But if a being from outside the system were to look at it from first principles, they would almost certainly conclude that requiring organisms to slowly become less functional over time, suffer, and then cease to exist is a pretty poor idea. I’m not convinced that curing death is feasible or a priority (even if we could figure out cryonics, we would also have to contend with the problems of overpopulation and the constant removal of biomass from the earth), but the prevalence of “circle of life” moral arguments and the discomfort evoked by discussions of cryonics and transhumanism indicate that the appeal to nature fallacy (or rather, the unappealingness of unnaturalness) is definitely in play here.

Food

Arguably, the domain in which the appeal to nature fallacy is hardest at work is the marketing and purchasing of food. A tried and true strategy to boost sales is to change your packaging color to green and plaster promises of “all-natural” on every possible surface. There are numerous government regulations governing what standards must be met for a food to be called organic or a chemical to be called a natural flavor, as well as laws requiring the labeling of foods containing genetically modified organisms (GMOs). In this section I’ll address a few food-related areas where the appeal to nature fallacy is most prevalent, and whether it has any basis in reality.

The Paleo Diet

One of the most salient examples of the appeal to nature fallacy in food shows up in the “Paleo” diet, which has risen to enormous popularity in the last few years. This diet claims that, since humans evolved to eat certain things, like nuts and meat, whereas things like grains, legumes, and milk only became staples in the last 10,000 years, humans haven’t had time to evolve a tolerance for these new foods, and therefore we would be healthier sticking to the oldies-but-goodies of our ancestors.

There are quite a few problems with this assertion. First, the claim that 10,000 years isn’t long enough to develop mechanisms for digesting novel foods is clearly false. One example of such a mechanism is many humans’ ability to digest lactose as adults, a trait that spread alongside dairying within roughly that time frame and that is essentially unique among mammals. Furthermore, there is no one diet that every human’s ancestors ate. From ancient times people have populated all corners of the globe, so the diet of an ancient inhabitant of the Canadian Arctic (consisting almost exclusively of fish and seal meat) and the diet of an ancient sub-Saharan African (consisting of all kinds of fruits, tubers, insects, eggs, and meat) would have had little in common. Humans have been able to live in such varied environments in part due to our extreme dietary flexibility. Thus, it doesn’t make sense to claim that any single list of foods represents what all living humans are ideally suited to eat.

That’s not to say there are no redeeming qualities to the “Paleo” diet. Any diet that encourages eating fresh foods, tracking calories and macronutrients, and being more aware of what one is eating has the potential to improve the dieter’s health. The problem is that many people choose to put themselves on the “Paleo” diet not for these reasons, but for logically fallacious ones.

Genetically modified organisms (GMOs)

GMOs include any living thing modified by directly manipulating its genes, but the ones we’re concerned with here are those found in food. These most commonly include crops engineered to be more disease- and pest-resistant, but more recently, nutritionally enhanced crops have become available as well, such as Golden Rice 2, a genetically engineered strain of rice intended to prevent vitamin A deficiency. There is also a genetically modified salmon, approved for consumption in the United States and Canada, that grows faster and requires less food than its conventional counterparts.

The world has had a complicated relationship with GMOs since their inception in 1973. Various countries have taken widely varying stances on regulation, with some banning them entirely, some banning some subset of their import, growth and research, and some (including the United States) passing laws requiring disclosure when included in consumer products, but not much else. But are even these light regulations necessary? Are GMOs dangerous, or even at all qualitatively different from conventionally-bred organisms?

Numerous studies have answered with a resounding NO. In fact, so many studies have reached this same conclusion that the American Association for the Advancement of Science, the American Medical Association, the National Academies of Sciences, Engineering, and Medicine, and other organizations have published statements asserting that currently available genetically modified foods are no riskier than their conventionally bred equivalents. Yet protest groups still vandalize fields in which new GMOs are being experimentally grown, and lobbyist groups still push for increased regulation and clearer labeling of foods containing GMOs. Nor do they represent a fringe opinion: 93% of Americans polled in 2013 were in favor of mandatory labeling of foods containing GMOs, and 75% expressed concerns about toxicity or GMO-related environmental problems.

This doesn’t mean GMOs are entirely benign. Some herbicide-tolerant strains have led to more aggressive use of weedkillers, the opposite of the reduced chemical use expected from inherently disease- and pest-resistant crops. However, this isn’t a problem with GMOs’ unnatural status, but with economic incentives determining which traits researchers pursue. Instead of regulating GMOs themselves, perhaps a better approach would be to restrict wanton herbicide use.

Organic

Organic foods are those whose production complies with government-specified limits on antibiotic, herbicide, and pesticide use. Though the United States’s regulations on what can be considered organic are rather lax, organic crops do show significantly lower levels of pesticide residue and heavy metals, and higher levels of polyphenols (compounds that form part of a plant’s innate defense against pests), than non-organic crops. However, the trace amounts of pesticide residue and heavy metals found in conventionally grown food have been tough to link to any deleterious health effects, and washing produce before eating significantly reduces even these amounts. Additionally, many consumers believe that since organic food is grown more naturally, it contains more vitamins and nutrients than the conventionally grown equivalent, but this has repeatedly been shown to be untrue. The vitamin and nutrient content of a crop does vary widely between growing conditions, but it has more to do with soil type and climate than with chemical use.

But what about the environmental aspect? Isn’t reducing the prevalence of toxic chemicals and antibiotics applied to the environment a good thing?

Yes, definitely. But due to the scale of the modern organic industry, these environmental benefits are counterbalanced by novel environmental problems. To prevent weeds from choking the crops without using weedkiller, organic farms rely on strategically timed tilling and human laborers to uproot the unwanted growth. Over-tilling destroys the soil community of fungi, earthworms, and other decomposers, and allows trapped nitrogen to escape into the air, both of which hurt soil fertility. To improve fertility without using synthetic petrochemical fertilizer, which is banned for organic crops, organic farms turn to more exotic solutions, including importing Chilean nitrate, which is often mined by child labor. And since the market demands that foods be available year-round, seasons be damned, organic foods are flown around the world at great emissions expense (processed or preservative-laden foods can endure a slower, lower-carbon-footprint journey by boat or truck).

Again, this is not to say that eating organic is a pointless exercise: organic crops are, in general, less unsustainable than their factory-farmed equivalents. Organic farming companies also tend to do more than industrial producers to improve their environmental and moral standing by, say, granting workers benefits and planting trees. But going organic is definitely not the most effective way to lower environmental impact; it just enjoys an outsized portion of public attention due to its perceived naturalness. Some more effective (though less convenient and less high-status) ways to reduce the environmental harm done through eating include reducing food waste, avoiding specific high-impact foods such as bananas and avocados, and preferring foods that are produced locally and in season.

Much of my understanding of the pros and cons of the organic food industry comes from The Omnivore’s Dilemma by Michael Pollan.

Conclusion

Okay, but so what? In general, these examples of the appeal to nature fallacy in action are relatively low-stakes. Does it really matter if GMOs are labeled in grocery stores, or if potheads ascribe godlike properties to weed, or if some people are dieting for the wrong reasons?

I chose these examples because I thought they would be the most useful, balanced, and believable to readers whom I model as being similar to myself, but there definitely exists a huge domain in which the applied appeal to nature fallacy can be deadly: alternative medicine and antivaxxers. It’s easy to dismiss people who hold such beliefs as dumb, delusional, or otherwise qualitatively different from oneself, but it’s not a difference in kind, just in magnitude. We all fall victim to the fallacy sometimes; those who decline cancer treatment in favor of homeopathy just fall harder. Those who neglect to have their children vaccinated contribute to preventable outbreaks of measles, pertussis, and other diseases. They conflate “unnatural” with “bad,” and in doing so they put both themselves and the public in danger.

I hope this post has given you a better understanding of how we often employ the appeal to nature fallacy in our everyday perceptions and decision-making, what it looks like in higher concentrations, and how to spot it yourself in the wild. Thanks for reading!